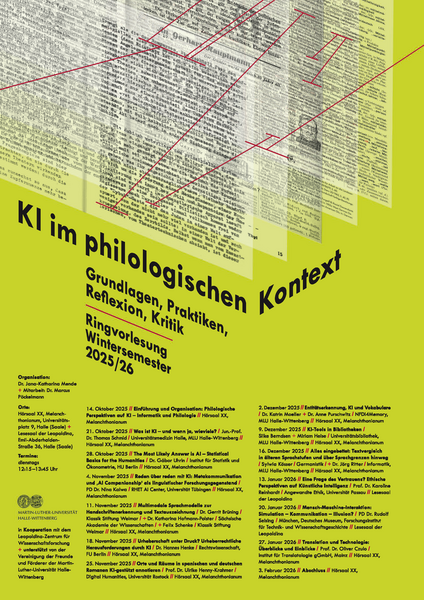

Should we trust AI? And what does trust actually mean in the context of artificial intelligence? Prof. Dr. Karoline Reinhardt addressed these and related questions in her lecture *A Question of Trust? Ethical Perspectives on Artificial Intelligence*. She delivered it on January 13 at Martin Luther University Halle (Saale) as part of the lecture series *AI in a Philological Context: Foundations, Practices, Reflection, Critique*, which was organized in cooperation with the Leopoldina Center for Science Studies.

Artificial intelligence poses new challenges to philosophical theories of trust. According to Reinhardt, it is not enough to meet these challenges by falling back on the concept of reliability alone. Trust must also be understood as a political emotion, one that can help us better grasp the novelty and complexity of AI governance.

In her lecture, Reinhardt referred to the *Ethics Guidelines for Trustworthy AI*, published in 2019 by the High-Level Expert Group on Artificial Intelligence (HLEG) on behalf of the European Commission. The guidelines name three components of trustworthy AI: it should be lawful, ethical, and robust.

In her earlier article *Trust and Trustworthiness in AI Ethics* (2023), Reinhardt provided an overview of how concepts of trust have been implemented in existing AI guidelines. She argued that approaches from practical philosophy should also be drawn on to better understand trust in the context of AI.

Link to the article *Trust and Trustworthiness in AI Ethics*: https://link.springer.com/article/10.1007/s43681-022-00200-5